Artificial Intelligence (AI) technology and chatbots have been around for some time, but it’s news to no one that they have never made a bigger impact than in the last few years. The public rollout of generative AI tools like ChatGPT, Claude, or Midjourney has changed the way we work, find information, and manage everyday tasks.

But generative AI tools have also proven to be an asset for bad actors. In early 2024, a finance worker in Hong Kong was tricked into transferring $25 million after joining a video call where every colleague was an AI-generated deepfake.

This wasn’t an isolated case. Fraudsters are now using AI-generated voices to impersonate family members in urgent money requests, and large language models to write convincing phishing emails that mimic executives’ styles. These tools make scams faster to launch, harder to detect, and far more scalable.

In this article, we’ll explore how generative AI is amplifying fraud risks such as synthetic identity fraud and cyber fraud, and how graph analytics can help detect and stop these evolving attacks.

Generative AI lowers the barrier to entry for attackers while multiplying their capabilities. Whether the goal is to steal money, credentials, or personal information, these tools make fraud schemes faster, cheaper, and harder to trace. How?

- Scalability at speed: Fraudsters can use AI to generate thousands of fake identity documents, contracts, invoices, or phishing messages in minutes, turning what once required specialized skills into an automated process.

- Hyper-realistic outputs: AI-generated text, images, audio, and video look authentic, making synthetic identities, deepfake scams, and impersonation fraud highly convincing. Victims and verification systems struggle to distinguish real from fake.

- Tailored and flexible: Generative AI makes it easy to customize fraud schemes. Scammers can create personalized phishing emails in a target’s native language, generate fake customer support interactions, or craft convincing backstories for synthetic identities. All of these are adapted to specific targets or institutions.

- Anonymity and reach: Many AI tools can be used without revealing a real identity, enabling bad actors to operate at scale with little chance of being traced.

Financial institutions are particularly vulnerable to new and evolving fraud schemes. In the years since 2020, following the Covid-19 pandemic, digital transformation has greatly accelerated within financial institutions. This provides an increased degree of convenience for banking customers, but it has also contributed to an increased risk of fraudsters exploiting digital systems for their own gain.

When you add generative AI to the mix as an easy tool to create and scale up fraud schemes, the risk only multiplies. The former Federal Trade Commission Chair, Lina Khan, wrote that generative AI is being used to “turbocharge” fraud. Experts in financial crime at major banks have also noted that the coming fraud boom enabled by this technology is one of the biggest threats facing the financial services industry.

On the ground, fraud specialists are seeing evidence of this boom. Deloitte’s Center for Financial Services predicts that gen AI could enable fraud losses to reach US$40 billion in the United States by 2027, from US$12.3 billion in 2023, a compound annual growth rate of 32%.

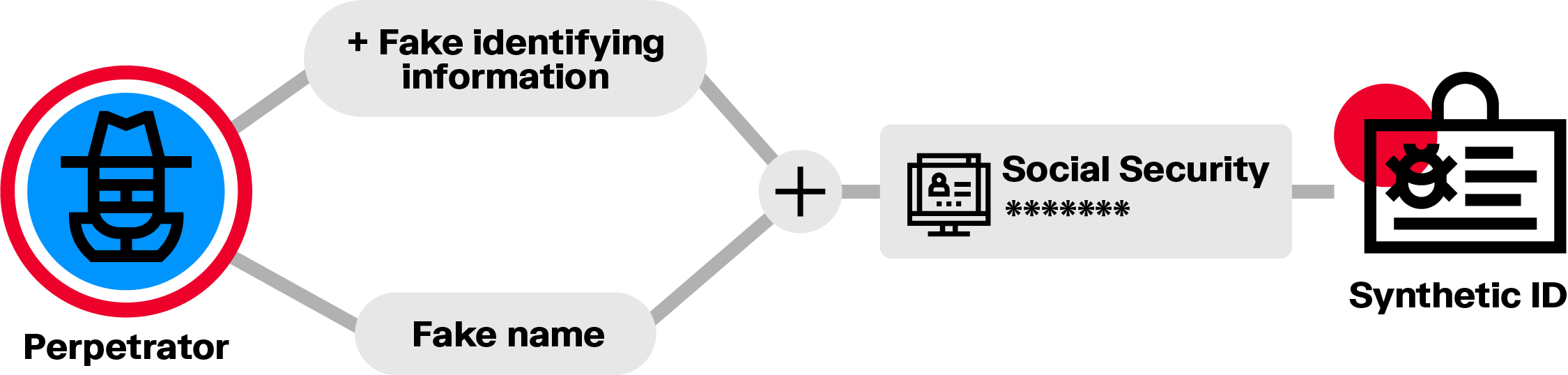

A growing tactic is the use of synthetic identities, false profiles that mix real and fake information, such as a genuine Social Security number paired with a fabricated name. Because they don’t belong to any one person, these identities are hard to detect.

Criminal groups are seizing on this opportunity. In recent months, some have started shifting tactics, moving from ransom attacks to synthetic identity fraud.

This type of fraud represents a big risk for banks. Fraudsters can build credit histories under synthetic identities, then max out loans or cards before disappearing. These identities can also facilitate crimes like money laundering.

Generative AI is lowering the barrier for cyber-enabled fraud. While traditional account takeover schemes and credential theft required technical expertise, large language models now allow even low-skilled fraudsters to craft sophisticated phishing campaigns, credential-harvesting tools, and social engineering attacks that adapt in real time to evade detection.

This shift creates new risks for financial institutions and other organizations, as fraudulent access and unauthorized transactions become harder to identify within vast volumes of data. Security agencies such as the UK’s National Cyber Security Centre have already warned that criminals are experimenting with LLMs to refine phishing lures and bypass authentication systems.

AI-enhanced fraud schemes can mimic legitimate user behavior, rotate through compromised identities, and operate undetected inside financial networks, allowing fraudsters to siphon funds, open fraudulent accounts, or launder money before organizations realize they’ve been breached.

Spotting fraud fueled by generative AI is a growing challenge. For financial institutions, onboarding and ongoing monitoring remain critical to identify red flags and anomalies. But conventional monitoring processes require bringing together different data sources to spot suspicious behavior patterns that might warrant further investigation. Without the right tools, detecting weak signals spread across multiple data sources can be challenging, all the more so as fraudsters increasingly leverage AI to scale and innovate.

Graph visualization and analytics offer a powerful response. By mapping data as a network of entities and relationships, graphs reveal hidden connections and patterns across disparate data sources. This approach makes it easier to detect sophisticated fraud rings, uncover deepfake-driven scams, or trace unusual movements that may indicate a fraud scheme.

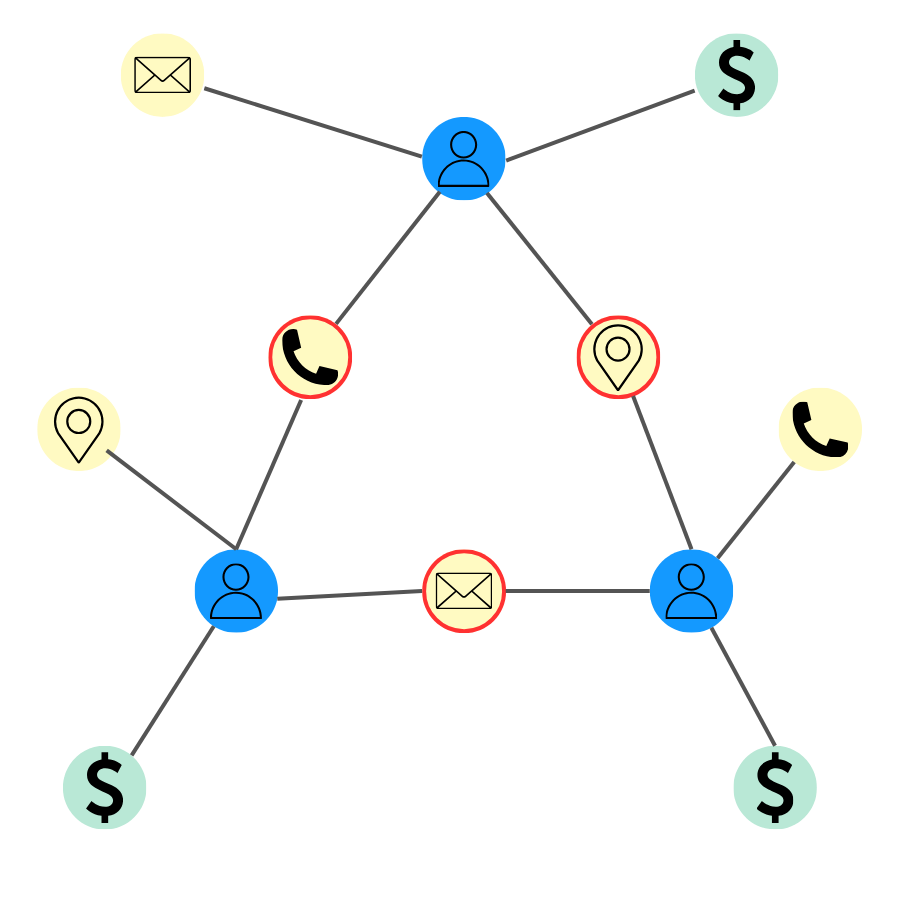

In a graph technology model, data is structured as a network. Individual data points - such as a person or a bank account - are stored as nodes, which are connected to each other by edges, representing the relationships between them. For example, a person has a bank account.
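As a rough illustration of this model, the sketch below stores nodes and edges in plain Python structures. The `Graph` class, the node identifiers, and the `HAS_ACCOUNT` relationship label are hypothetical names chosen for this example; they do not reflect any particular graph database or product.

```python
from collections import defaultdict


class Graph:
    """A tiny in-memory graph of nodes and labeled edges (illustrative only)."""

    def __init__(self):
        self.nodes = {}                 # node id -> properties
        self.edges = defaultdict(list)  # node id -> [(relationship, neighbor id)]

    def add_node(self, node_id, **properties):
        self.nodes[node_id] = properties

    def add_edge(self, source, relationship, target):
        # Store the edge in both directions so it can be traversed either way.
        self.edges[source].append((relationship, target))
        self.edges[target].append((relationship, source))

    def neighbors(self, node_id, relationship=None):
        # Return connected node ids, optionally filtered by relationship label.
        return [
            other
            for rel, other in self.edges.get(node_id, [])
            if relationship is None or rel == relationship
        ]


graph = Graph()
graph.add_node("person:alice", kind="Person", name="Alice")
graph.add_node("account:001", kind="BankAccount")
graph.add_edge("person:alice", "HAS_ACCOUNT", "account:001")

print(graph.neighbors("person:alice"))  # ['account:001']
```

A production system would typically rely on a dedicated graph database rather than in-memory dictionaries, but the node and edge structure is the same idea.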

A graph analytics approach is able to combine data from multiple sources, such as customer databases and external data sources, and draw connections between the data within. By displaying both direct and indirect connections, this technology makes it far easier to detect complex fraud patterns that would have been difficult or impossible to uncover otherwise.

In the case of synthetic identity fraud, a graph can quickly reveal whether pieces of data are shared by multiple applicants or account holders. Several individuals connected through the same personally identifiable information, such as an address, a phone number, or a date of birth, may be a red flag that a ring of fraudsters is operating with synthetic identities.
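The idea of applicants linked through shared personal data can be sketched as a connected-components search. The example below is a minimal sketch, not a production detection rule: the records, the field names, and the function `find_shared_pii_clusters` are all made up for illustration. Each (field, value) pair acts as an attribute node, and applications that share one end up in the same cluster, including indirectly.

```python
from collections import defaultdict

# Hypothetical application records; every value here is invented.
applications = [
    {"id": "app-1", "phone": "555-0101", "address": "12 Oak St", "dob": "1990-04-02"},
    {"id": "app-2", "phone": "555-0101", "address": "98 Elm Ave", "dob": "1985-11-30"},
    {"id": "app-3", "phone": "555-0177", "address": "98 Elm Ave", "dob": "1979-01-15"},
    {"id": "app-4", "phone": "555-0199", "address": "7 Pine Rd", "dob": "1993-07-21"},
]
PII_FIELDS = ("phone", "address", "dob")


def find_shared_pii_clusters(records, fields=PII_FIELDS):
    """Group applications linked, directly or indirectly, by shared PII values."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    # Merge any application with the first one seen for the same (field, value).
    first_seen = {}
    for r in records:
        for field in fields:
            key = (field, r[field])
            if key in first_seen:
                union(r["id"], first_seen[key])
            else:
                first_seen[key] = r["id"]

    clusters = defaultdict(set)
    for r in records:
        clusters[find(r["id"])].add(r["id"])
    # Only clusters with more than one application are worth a review.
    return [sorted(c) for c in clusters.values() if len(c) > 1]


print(find_shared_pii_clusters(applications))  # [['app-1', 'app-2', 'app-3']]
```

Note that `app-1` and `app-3` share no field directly; they are linked only through `app-2`, which is the kind of indirect connection a row-by-row comparison tends to miss.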

Graph algorithms can also identify unusual application patterns, like spikes of applications from a certain location or using similar formatting. Linking these applications back to common devices or addresses again spotlights likely synthetic identities.
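A simplified version of this two-step check might look like the following. It is only a sketch under stated assumptions: the event tuples, the fixed `spike_threshold`, and the function `spikes_with_shared_devices` are hypothetical, and a real system would more likely compare volumes against a historical baseline than against a hard-coded count.

```python
from collections import Counter, defaultdict

# Hypothetical application events: (application id, date, city, device id).
events = [
    ("app-10", "2024-05-01", "Springfield", "device-A"),
    ("app-11", "2024-05-01", "Springfield", "device-A"),
    ("app-12", "2024-05-01", "Springfield", "device-B"),
    ("app-13", "2024-05-01", "Riverton", "device-C"),
    ("app-14", "2024-05-02", "Springfield", "device-D"),
]


def spikes_with_shared_devices(events, spike_threshold=3):
    """Find devices reused across applications filed during a volume spike."""
    # Step 1: count applications per (date, city) and keep the unusually busy ones.
    volume = Counter((day, city) for _, day, city, _ in events)
    spikes = {key for key, count in volume.items() if count >= spike_threshold}

    # Step 2: within those spikes, link applications back to their device.
    by_device = defaultdict(list)
    for app_id, day, city, device in events:
        if (day, city) in spikes:
            by_device[device].append(app_id)

    # A device behind several spike applications is a candidate for review.
    return {device: apps for device, apps in by_device.items() if len(apps) > 1}


print(spikes_with_shared_devices(events))  # {'device-A': ['app-10', 'app-11']}
```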

By visualizing these connections, analysts and investigators can quickly focus on high-risk groupings of applications and accounts. Timely interventions prevent fraud rings from inflicting major damage. Ongoing graph analysis also allows suspicious entities to be tracked over time even as stolen data elements are recombined into new synthetic identities.

In cyber fraud, attackers often move laterally once inside a financial system, escalating account privileges, manipulating transaction limits, or exfiltrating customer data to enable further fraud.

Graphs can connect signals from user accounts, devices, transaction histories, and access patterns to visualize unusual behavior or anomalous connections that indicate fraudulent activity. Examples of patterns that may indicate coordinated fraud include an account accessing multiple customer records it has never touched before, credentials being used from new locations to make wire transfers, or multiple compromised accounts funneling funds toward the same destination account.
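The funneling pattern, where many accounts send funds to one destination, amounts to looking for nodes with an unusually high number of distinct incoming senders. The sketch below assumes a hypothetical list of transfers and an arbitrary `min_sources` threshold; the function name `find_funnel_accounts` is also invented for this example, and the output is a lead for investigation rather than proof of fraud.

```python
from collections import defaultdict

# Hypothetical transfers: (source account, destination account, amount).
transfers = [
    ("acct-1", "acct-9", 900.0),
    ("acct-2", "acct-9", 950.0),
    ("acct-3", "acct-9", 875.0),
    ("acct-1", "acct-5", 40.0),
    ("acct-4", "acct-5", 60.0),
]


def find_funnel_accounts(transfers, min_sources=3):
    """Return destinations that receive funds from many distinct sources."""
    sources = defaultdict(set)
    totals = defaultdict(float)
    for src, dst, amount in transfers:
        sources[dst].add(src)   # distinct senders per destination (in-degree)
        totals[dst] += amount   # total value received

    return {
        dst: {"sources": sorted(srcs), "total": totals[dst]}
        for dst, srcs in sources.items()
        if len(srcs) >= min_sources
    }


print(find_funnel_accounts(transfers))
# {'acct-9': {'sources': ['acct-1', 'acct-2', 'acct-3'], 'total': 2725.0}}
```

In practice, the sender set would usually be narrowed first, for example to accounts already flagged as compromised, so that ordinary high-volume recipients such as utilities or payroll accounts are not surfaced.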

By surfacing these hidden pathways and relationships between compromised identities, fraudulent transactions and money movements, graph technology helps fraud teams detect account takeover schemes, credential stuffing operations, or money laundering networks that traditional monitoring tools miss.

Graph technology is a powerful ally in fraud detection because it excels at modeling and analyzing complex webs of entities, behaviors, and connections. Fraudsters depend on hidden networks, whether of synthetic identities, mule accounts, compromised endpoints, or malicious actors, to scale their schemes. Graph analytics exposes those hidden structures and helps analysts spot anomalies that traditional approaches miss.

By continually mapping new entity and relationship data into an evolving graph, investigators can spotlight suspicious individuals, accounts, and activities that may be part of an AI-orchestrated scheme. Graph analytics shines a light into the interconnected dark spaces where fraud breeds – providing the visibility needed to fight back.

Graph visualization and analytics solutions like Linkurious Enterprise let you adapt to emerging threats such as AI-fueled fraud and analyze new cases quickly. By delivering a holistic view of the networks around clients and transactions, Linkurious Enterprise shines a light on the complex hidden connections in your data to reveal criminal behaviors that would otherwise go undetected. See how it works for yourself.

FAQ: Applying graph technology to generative AI fraud detection

1. What is an example of AI fraud?

2. What industries are most at risk from AI-driven fraud?

3. How can organizations protect themselves against AI-driven fraud?
