Confronting the risky side of AI: Applying graph technology to generative AI fraud detection

Artificial Intelligence (AI) technology and chatbots have been around for some time, but never have they made a bigger impact than this year. With the public rollout of generative AI tools like OpenAI’s ChatGPT, Anthropic’s Claude or Midjourney, the power of AI is now at the fingertips of anyone with an internet connection. These tools have proven useful across a wide range of use cases, helping people across industries improve their efficiency and save time on daily tasks.

But generative AI tools have also proven to be an asset for bad actors. We are now seeing scammers leverage generative AI in banking fraud and in other fraud schemes, greatly increasing potential risk and losses. In this article we’ll explore why widely available generative AI tools are creating increased risks for banks and financial institutions, especially around scams like synthetic identity fraud. We’ll also look at how new technologies like graph visualization and analytics can be a key asset in generative AI fraud detection.

Before we take a look at banking fraud specifically, it’s worth understanding why generative AI tools are so attractive to fraudsters across the board, whether they are targeting financial institutions or individual victims.

- Generative AI allows fraudsters to set up schemes with unprecedented efficiency. These tools can churn out tons of fake identity documents, contracts, invoices and other essential scam materials in a matter of minutes. This enables criminals to quickly scale up fraud schemes that can target thousands of victims.

- Because the texts, images and audio that AI can now generate are so human-like, AI-assisted fraud schemes appear highly legitimate on the surface. It becomes very difficult to detect that they were made by a machine, complicating fraud detection efforts.

- Generative AI gives fraudsters a huge amount of flexibility to customize scams. The tools can generate varied, tailored content for each target, making the schemes more believable. Criminals can also easily pivot schemes in new directions in response to changing contexts and to exploit new loopholes.

- Many generative AI tools can be used anonymously. Fraudsters can therefore rely on AI to create convincing scam materials without ever revealing their identity. This makes it much harder for authorities to track down the perpetrators of these fraud schemes.

Criminal uses of generative AI are further facilitated by the current regulatory context - or lack thereof. These tools have only very recently been made available to the general public, and regulation hasn’t yet caught up (1). In the US, Congress is only in the early stages of producing regulatory legislation. The EU has advanced further in proposing legislation as it works on the EU AI Act, but the law has not yet been finalized. For now, prominent generative AI companies have simply agreed to provide minimum safety guardrails on a voluntary basis. This leaves plenty of room for bad actors to use AI tools for shady or illegal activities.

Financial institutions are particularly vulnerable to new and evolving fraud schemes. In the years since 2020, following the Covid-19 pandemic, digital transformation has greatly accelerated within financial institutions. This provides an increased degree of convenience for banking customers, but it has also contributed to an increased risk of fraudsters exploiting digital systems for their own gain.

When you add generative AI to the mix as an easy tool to create and scale up fraud schemes, the risk only multiplies. The Federal Trade Commission Chair, Lina Khan, has written that generative AI is being used to “turbocharge” fraud (2). Experts in financial crime at major banks have noted that the coming fraud boom, driven in part by this type of technology, is one of the biggest threats facing the financial services industry. On the ground, fraud specialists are seeing evidence of this boom. The head of fraud management at the Commonwealth Bank of Australia recently stated that his teams are tracking about 85 million events a day through a network of surveillance tools (3).

There are plenty of ways artificial intelligence can be leveraged to imitate a legitimate person in order to obtain ill-gotten gains. With generative AI, fraudsters can imitate or invent a person using text or even audio or video, generating various types of PII and other assets in mere seconds. This type of identity fraud may be used to carry out phishing campaigns, romance scams, etc. Fraudsters can also use AI to massively scale their fraud schemes. Fraudulent activities often involve multiple, complex steps that can take a long time to carry out. Now, fraudsters can automate much of that work.

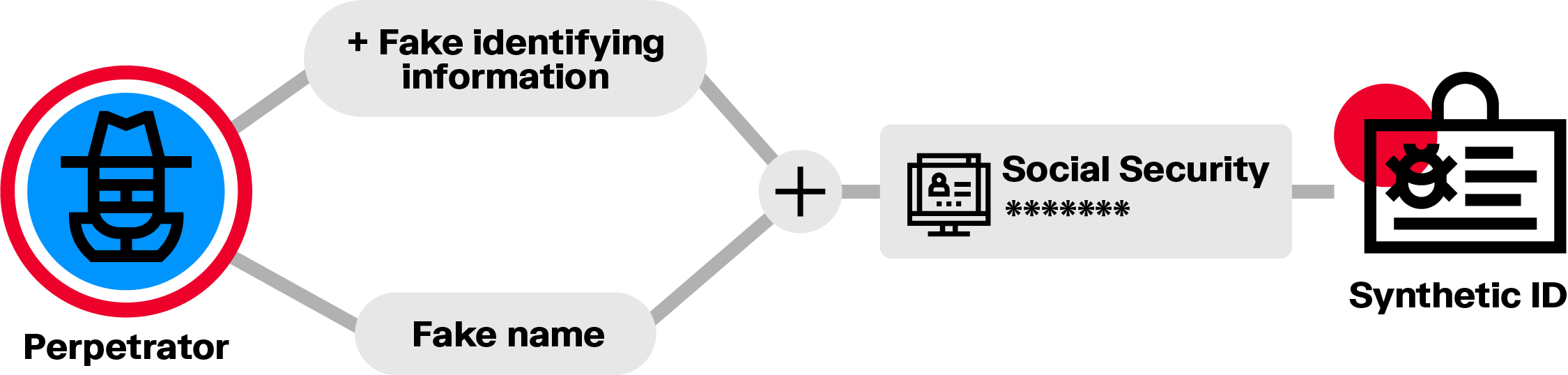

AI tools can also be used to generate fake IDs or synthetic identities that look very believable. Fraudsters can do this at scale. A synthetic identity is a false identity that mixes real and fake personal information. It may include a real social security number (SSN) and a fake name, for example. This information together does not belong to any one real person. With the widespread availability of generative AI, this type of fraud has become even more attractive to bad actors. In recent months, some criminal groups have started shifting tactics, moving from ransom attacks to synthetic identity fraud (4).

This type of ID fraud represents a big risk for banks. A fraudster will often use a synthetic identity to build credit over time, enabling them to eventually max out their credit before disappearing completely, which can generate huge losses. Synthetic identities can also be used for other types of criminal activities that put banks at risk, such as money laundering.

Spotting a fraud scheme can be really challenging. Both onboarding and ongoing monitoring are important for banks to identify red flags for fraud and other shady behavior. But KYC/KYB, customer due diligence (CDD), and other monitoring processes require bringing together different data sources to spot suspicious behavior patterns that might warrant further investigation. Without the right tools, detecting weak signals spread across multiple sources of data can be tricky - and all the more so as fraudsters leverage AI to scale and innovate.

In response to quickly evolving fraud schemes, graph visualization and analytics technology is a strong asset for financial institutions to efficiently detect and investigate suspicious activities.

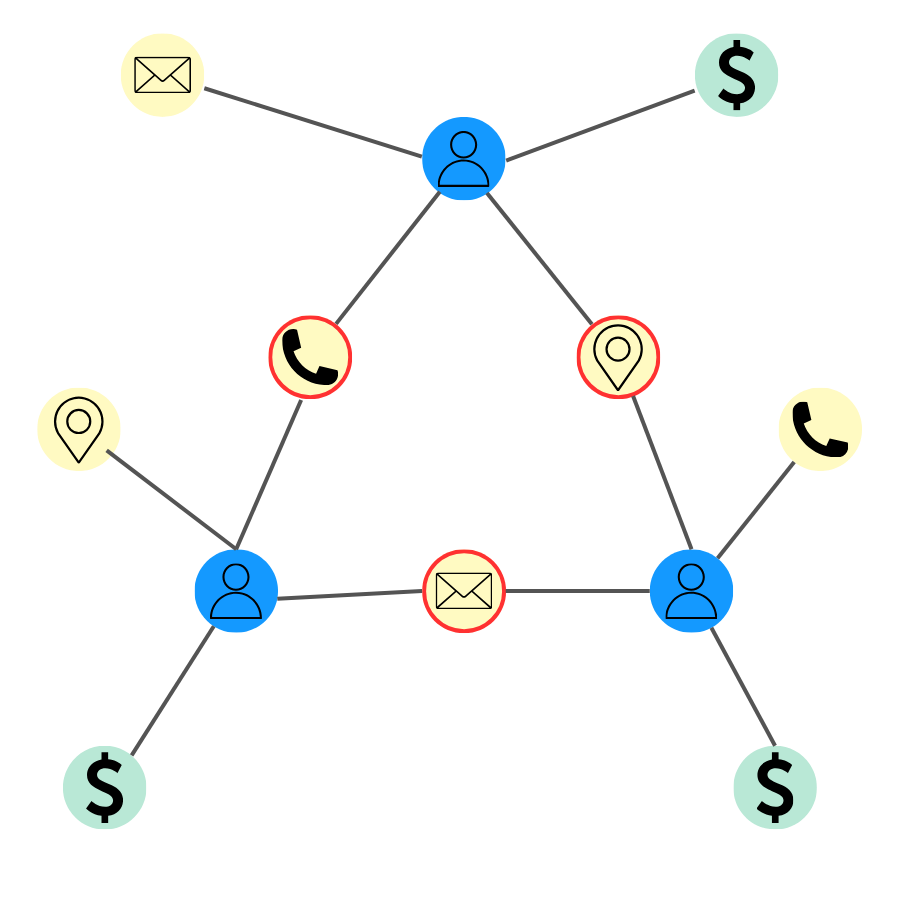

In a graph technology model, data is structured as a network. Individual data points - such as a person or a bank account - are stored as nodes, which are connected to each other by edges, representing the relationships between them. For example, a person has a bank account.
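To make the node and edge idea concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular graph database stores data; the `Graph` class, the node ids and the `HAS_ACCOUNT` label are all hypothetical names chosen for this example.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Graph:
    """A tiny in-memory graph: typed nodes joined by labeled edges."""
    nodes: dict = field(default_factory=dict)  # node id -> node type
    edges: dict = field(default_factory=lambda: defaultdict(set))  # node id -> {(label, neighbor id)}

    def add_node(self, node_id, node_type):
        self.nodes[node_id] = node_type

    def add_edge(self, source, label, target):
        # Store the edge in both directions so it can be traversed either way.
        self.edges[source].add((label, target))
        self.edges[target].add((label, source))

    def neighbors(self, node_id, label=None):
        """Return neighbor ids, optionally restricted to one edge label."""
        return sorted(t for (l, t) in self.edges[node_id] if label is None or l == label)


# "A person has a bank account" expressed as two nodes and one edge.
graph = Graph()
graph.add_node("person:alice", "Person")
graph.add_node("account:001", "BankAccount")
graph.add_edge("person:alice", "HAS_ACCOUNT", "account:001")

print(graph.neighbors("person:alice"))  # ['account:001']
```

Storing each edge in both directions is a simplification that keeps traversal easy; a real system would usually keep edge direction and properties as well.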

A graph analytics approach is able to combine data from multiple sources, such as customer databases and external data sources, and draw connections between the data within. By displaying both direct and indirect connections, this technology makes it far easier to detect complex fraud patterns that would have been difficult or impossible to uncover otherwise.
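As a rough sketch of what an indirect connection looks like in practice, the Python snippet below merges two edge lists and searches for the shortest chain between two accounts. The data, the source names (`customer_db`, `external_registry`) and the helper functions are invented for illustration and do not reflect any specific product.

```python
from collections import defaultdict, deque

# Hypothetical edge lists from two separate sources. Invented data.
customer_db = [("person:ana", "account:100"), ("person:ben", "account:200")]
external_registry = [("person:ana", "company:acme"), ("person:ben", "company:acme")]


def merge_sources(*sources):
    """Combine edge lists into one undirected adjacency map."""
    adjacency = defaultdict(set)
    for source in sources:
        for left, right in source:
            adjacency[left].add(right)
            adjacency[right].add(left)
    return adjacency


def shortest_path(adjacency, start, goal):
    """Breadth-first search; returns the shortest chain of nodes, or None."""
    queue, previous = deque([start]), {start: None}
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in adjacency.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


merged = merge_sources(customer_db, external_registry)
print(shortest_path(merged, "account:100", "account:200"))
# ['account:100', 'person:ana', 'company:acme', 'person:ben', 'account:200']
```

With the customer database alone, the two accounts look unrelated. The link through a shared company only appears once the second source is merged in.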

In the case of synthetic identity fraud, a graph can quickly reveal whether pieces of data are shared by multiple applicants or account holders. Several individuals interconnected through personally identifiable information such as an address, a phone number, a date of birth, etc. may be a red flag that a ring of fraudsters is operating with synthetic identities.
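The shared-data check described above can be sketched as a connected-components search. This is a minimal Python illustration under simple assumptions: the applicant records are invented, values are compared by exact match, and the function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical applicant records; all values are invented for illustration.
applicants = {
    "app-1": {"phone": "555-0101", "address": "12 Oak St", "dob": "1990-01-01"},
    "app-2": {"phone": "555-0101", "address": "98 Elm Ave", "dob": "1985-06-15"},
    "app-3": {"phone": "555-0199", "address": "98 Elm Ave", "dob": "1979-03-22"},
    "app-4": {"phone": "555-0142", "address": "7 Pine Rd", "dob": "1992-11-30"},
}


def build_graph(records):
    """Link each applicant node to one node per (field, value) pair."""
    adjacency = defaultdict(set)
    for applicant_id, attributes in records.items():
        for field_name, value in attributes.items():
            pii_node = (field_name, value)
            adjacency[applicant_id].add(pii_node)
            adjacency[pii_node].add(applicant_id)
    return adjacency


def linked_groups(records, min_size=2):
    """Return groups of applicants connected through shared PII values."""
    adjacency = build_graph(records)
    seen, groups = set(), []
    for start in records:
        if start in seen:
            continue
        # Depth-first traversal of the connected component containing `start`.
        stack, component = [start], set()
        seen.add(start)
        while stack:
            node = stack.pop()
            if node in records:  # keep applicants, skip PII nodes
                component.add(node)
            for neighbor in adjacency[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        if len(component) >= min_size:
            groups.append(sorted(component))
    return groups


print(linked_groups(applicants))  # [['app-1', 'app-2', 'app-3']]
```

Note that `app-1` and `app-3` share nothing directly. They end up in the same group because each is tied to `app-2`, which is the kind of indirect link that is hard to see in a flat table. Real data would also need normalization (address formats, phone prefixes) before matching.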

Graph algorithms can also identify unusual application patterns, like spikes of applications from a certain location or using similar formatting. Linking these applications back to common devices or addresses again spotlights likely synthetic identities.
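Here is one simple way this could look in Python. It is a sketch under stated assumptions rather than a production detector: the application log is invented, the rule "at least three times the location's median daily volume" is an arbitrary threshold chosen for the example, and days with no applications are ignored.

```python
from collections import Counter, defaultdict
from statistics import median

# Hypothetical application log: (date, postal code, device id). Invented data.
applications = [
    ("2023-09-01", "75001", "dev-a"),
    ("2023-09-02", "75001", "dev-b"),
    ("2023-09-03", "75001", "dev-c"),
    ("2023-09-04", "75001", "dev-x"),
    ("2023-09-04", "75001", "dev-x"),
    ("2023-09-04", "75001", "dev-x"),
    ("2023-09-04", "75001", "dev-y"),
    ("2023-09-04", "69002", "dev-z"),
]


def find_spikes(log, factor=3):
    """Flag (location, date, count) where volume is >= factor x the location's median day."""
    daily = defaultdict(Counter)
    for date, location, _device in log:
        daily[location][date] += 1
    spikes = []
    for location, counts in daily.items():
        baseline = median(counts.values())
        for date, count in counts.items():
            if count > 1 and count >= factor * baseline:
                spikes.append((location, date, count))
    return sorted(spikes)


def devices_behind(log, location, date):
    """Count applications per device within a flagged spike."""
    return Counter(d for (dt, loc, d) in log if loc == location and dt == date)


for location, date, count in find_spikes(applications):
    print(location, date, count, devices_behind(applications, location, date).most_common(1))
# 75001 2023-09-04 4 [('dev-x', 3)]
```

The second step is what ties the spike back to the graph: three of the four applications in the flagged spike come from the same device, which points to a single actor behind several identities.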

By visualizing these connections, analysts and investigators can quickly focus on high-risk groupings of applications and accounts. Timely interventions prevent fraud rings from inflicting major damage. Ongoing graph analysis also allows suspicious entities to be tracked over time even as stolen data elements are recombined into new synthetic identities.

Beyond detecting synthetic identities partially concocted using generative AI, graph technology is a powerful tool for automating anomaly detection more broadly. A graph data structure allows complex webs of entities and relationships to be modeled and analyzed. Since fraudsters typically rely on networks of fake identities, accounts, documents and corridors of illicit money flows, graph technology lends itself perfectly to unraveling fraud networks. Powerful pattern recognition capabilities can spot anomalies in these graphs that warrant further investigation.

For example, a graph could combine data related to customer identities, accounts, transactions, etc. Sophisticated graph algorithms can then detect suspicious patterns like abnormal flows of funds. These anomalous patterns send up a red flag for fraud analysts to investigate further.
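One abnormal flow pattern that is easy to express on a graph is money that leaves an account and comes back to it through a chain of intermediaries. The Python sketch below looks for such circular flows. It assumes a small, invented list of transfers and a hypothetical `find_cycles` helper; circular flows are only one example of a suspicious pattern, and a cycle on its own is not proof of fraud.

```python
from collections import defaultdict

# Hypothetical transfers: (sender, receiver, amount). Invented data.
transfers = [
    ("acc-A", "acc-B", 9500),
    ("acc-B", "acc-C", 9400),
    ("acc-C", "acc-A", 9300),
    ("acc-A", "acc-D", 120),
    ("acc-D", "acc-E", 80),
]


def find_cycles(edges, max_length=4):
    """Return simple cycles of at most `max_length` accounts in the transfer graph."""
    outgoing = defaultdict(set)
    for sender, receiver, _amount in edges:
        outgoing[sender].add(receiver)

    cycles = set()

    def walk(start, node, path):
        for nxt in outgoing.get(node, ()):
            if nxt == start:
                # Rotate so the smallest account id comes first, which removes
                # duplicates of the same cycle found from different starts.
                pivot = path.index(min(path))
                cycles.add(tuple(path[pivot:] + path[:pivot]))
            elif nxt not in path and len(path) < max_length:
                walk(start, nxt, path + [nxt])

    for account in list(outgoing):
        walk(account, account, [account])
    return sorted(cycles)


print(find_cycles(transfers))  # [('acc-A', 'acc-B', 'acc-C')]
```

The `max_length` cap keeps the search cheap on this toy data. On a real transaction graph, an exhaustive search like this would need tighter bounds on time windows and amounts, or a dedicated graph engine.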

By continually mapping new entity and relationship data into an evolving fraud graph, investigators can spotlight suspicious individuals, accounts, and activities that may be part of an AI-orchestrated scheme. Graph analytics shines a light into the interconnected dark spaces where fraud breeds – providing the visibility needed to fight back.

Graph visualization and analytics solutions like Linkurious Enterprise let you quickly adapt to emerging threats such as AI-fueled fraud, and quickly analyze new cases. By delivering a holistic view of the networks around clients and transactions, Linkurious Enterprise shines a light on the complex hidden connections in your data to reveal criminal behaviors that would otherwise go undetected. See how it works for yourself.

(1) https://www.pillsburylaw.com/en/news-and-insights/ai-regulations-us-eu-uk-china.html

(2) https://www.nytimes.com/2023/05/03/opinion/ai-lina-khan-ftc-technology.html

(3) https://www.bloomberg.com/news/articles/2023-08-21/money-scams-deepfakes-ai-will-drive-10-trillion-in-financial-fraud-and-crime?srnd=premium#xj4y7vzkg

(4) https://arstechnica.com/information-technology/2023/06/fears-grow-of-deepfake-id-scams-following-progress-hack/
